Race with AI, not against AI

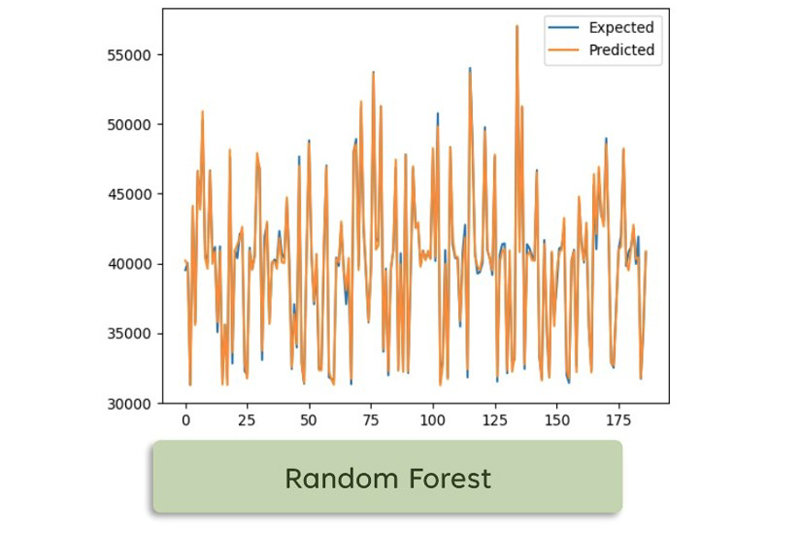

Despite their growing capabilities, all of the generative AI tools struggle to build arguments grounded in theoretical frameworks, to keep those arguments coherent, and to provide appropriate references.

RMIT Lecturer Dr Nguyen Nhat Minh said, “This gives students a chance to build on the basics provided by AI. Instead of collecting information and forming basic arguments, students should take on a more nuanced role by combining AI outputs with relevant theories and real-world contexts.”

For educators, it is crucial to embrace the integration of AI tools into their teaching methods.

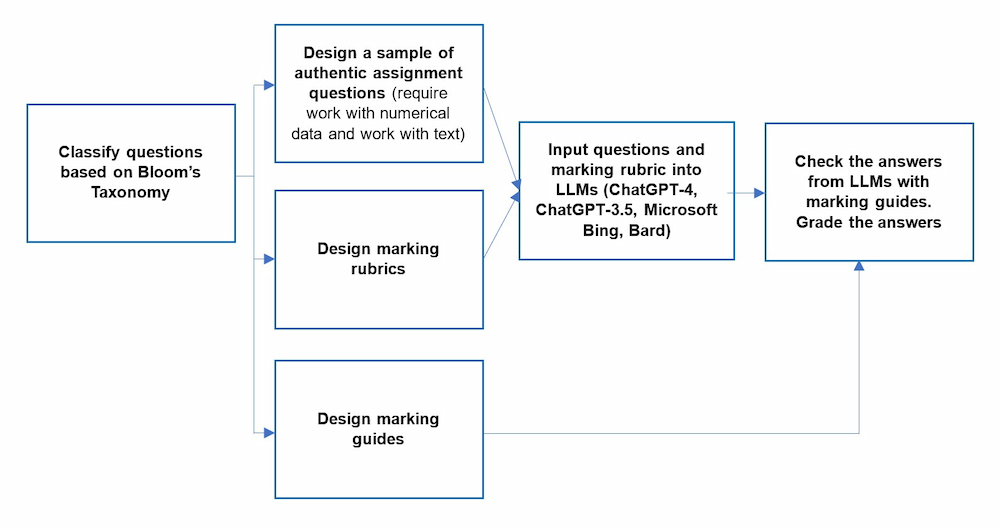

Rather than designing assessments and learning activities that aim solely to counteract the capabilities of generative AI, educators should focus on crafting authentic assessments that develop students' higher-level cognitive skills, such as evaluation and creativity, while harnessing the advantages of AI technologies.

The goal is to develop an assessment environment that genuinely fosters learning and the development of critical skills in harmony with AI tools.

Dr Minh explained, “Such an environment would not only prepare students to think critically and creatively but also equip them with the ability to effectively use AI as a tool for innovation and problem solving.

“By doing so, we can ensure that education evolves in tandem with technological advancements, preparing students for a future where they can successfully collaborate with AI in various domains.”

Dr Binh reiterated that as generative AI becomes more prevalent in the workplace, professionals, especially those in data- and text-based fields, will see their roles transform significantly.

“This shift demands a proactive response. Educators and educational institutions need to review and consider redesigning their assessments, course outcomes and academic programs,” Dr Binh said.

The article “Race with the machines: Assessing the capability of generative AI in solving authentic assessments” is published in the Australasian Journal of Educational Technology (DOI: 10.14742/ajet.8902).

Story: Ngoc Hoang